ElasticSearch is a highly scalable open source search engine with a REST API that is hard not to love. In this tutorial we'll look at some of the key concepts when getting started with ElasticSearch.

Downloading and running ElasticSearch

ElasticSearch can be downloaded packaged in various formats such as ZIP and TAR.GZ from elasticsearch.org. After downloading and extracting a package, running it couldn't be much easier, at least if you already have a Java runtime installed.

Running ElasticSearch on Windows

To run ElasticSearch on Windows we run elasticsearch.bat located in the bin folder from a command window. This will start ElasticSearch running in the foreground in the console, meaning we'll see errors in the console and can shut it down using CTRL+C.

If a Java runtime isn't installed or isn't correctly configured, we won't see ElasticSearch start up but instead get a message saying "JAVA_HOME environment variable must be set!". To fix that, first download and install Java if you don't already have it. Second, ensure that you have a JAVA_HOME environment variable configured correctly (Google it if unsure of how).

Running ElasticSearch on OS X

To run ElasticSearch on OS X we run the shell script elasticsearch in the bin folder. This starts ElasticSearch in the background, meaning that if we want to see output from it in the console and be able to shut it down we should add a -f flag.

If the script is unable to find a suitable Java runtime it will help you download it (nice!).

Using the REST API with Sense

Once you have an instance of ElasticSearch up and running you can talk to it using its JSON-based REST API residing at localhost port 9200. You can use any HTTP client to talk to it. In ElasticSearch's own documentation all examples use curl, which makes for concise examples. However, when playing with the API you may find a graphical client such as Fiddler or RESTClient more convenient.

Even more convenient is the Chrome plug-in Sense. Sense provides a simple user interface specifically for using ElasticSearch's REST API. It also has a number of convenient features such as autocomplete for ElasticSearch's query syntax and copying and pasting requests in curl format, making it easy to run examples from the documentation.

We'll be looking at a combination of curl requests and screenshots from Sense throughout this tutorial, and I recommend that you install Sense and use it to follow along.

Once you have installed it you'll find Sense's icon in the upper right corner in Chrome. The first time you click it and run Sense a very simple sample request is prepared for you.

That sample request performs the simplest of search queries, matching all documents in all indexes on the server. Running it against a vanilla installation of ElasticSearch produces an error in the response as there aren't any indexes.

Our next step is to index some data, fixing this issue.

CRUD

While we may want to use ElasticSearch primarily for searching, the first step is to populate an index with some data, meaning the "Create" of CRUD, or rather, "indexing". While we're at it we'll also look at how to update, read and delete individual documents.

Indexing

In ElasticSearch, indexing corresponds to both "Create" and "Update" in CRUD: if we index a document with a given type and ID that doesn't already exist, it's inserted. If a document with the same type and ID already exists, it's overwritten.

In order to index a first JSON object we make a PUT request to the REST API to a URL made up of the index name, type name and ID. That is: http://localhost:9200/<index>/<type>/[<id>].

Index and type are required while the ID part is optional. If we don't specify an ID, ElasticSearch will generate one for us. However, in that case we should use POST instead of PUT.

The index name is arbitrary. If there isn't an index with that name on the server already one will be created using default configuration.

As for the type name it too is arbitrary. It serves several purposes, including:

- Each type has its own ID space.

- Different types can have different mappings ("schema" that defines how properties/fields should be indexed).

- Although it's possible, and common, to search over multiple types, it's easy to search only for one or more specific type(s).
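The URL pattern and the PUT/POST rule described above can be sketched in Python. This is a minimal sketch using only the standard library; the helper name build_index_request and the BASE_URL constant are assumptions made for illustration, not part of ElasticSearch. The code only builds the request. Nothing is sent until the request is passed to urllib.request.urlopen.

```python
import json
import urllib.request

BASE_URL = "http://localhost:9200"  # assumed local instance, as used in this tutorial


def build_index_request(index, doc_type, document, doc_id=None):
    """Build (but don't send) an indexing request.

    PUT is used when an ID is given, POST when ElasticSearch should generate one.
    """
    url = f"{BASE_URL}/{index}/{doc_type}"
    method = "POST"
    if doc_id is not None:
        url += f"/{doc_id}"
        method = "PUT"
    body = json.dumps(document).encode("utf-8")
    return urllib.request.Request(url, data=body, method=method)


# "notes"/"note" are placeholder index and type names for this sketch.
request = build_index_request("notes", "note", {"text": "hello"}, doc_id=1)
print(request.get_method(), request.full_url)
```

With a running instance, urllib.request.urlopen(request) would send it and return the JSON response.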

Let's index something! We can put just about anything into our index as long as it can be represented as a single JSON object. In this tutorial we'll be indexing and searching for movies. Here's a classic one:

{ "title": "The Godfather", "director": "Francis Ford Coppola", "year": 1972 }

To index that we decide on an index name ("movies"), a type name ("movie") and an id ("1") and make a request following the pattern described above with the JSON object in the body.

curl -XPUT "http://localhost:9200/movies/movie/1" -d' { "title": "The Godfather", "director": "Francis Ford Coppola", "year": 1972 }'

You can either run that using curl or use Sense. With Sense you can either populate the URL, method and body yourself or you can copy the above curl example, place the cursor in the body field in Sense and press Ctrl/Command + Shift + V and all of the fields will be populated for you.

The response object contains information about the indexing operation, such as whether it was successful ("ok") and the document's ID, which can be of interest if we don't specify that ourselves.

If we now rerun the default search request that Sense provides, the one that failed before, we'll see a different result. (The request is accessible using the "History" button in Sense, provided that you executed it earlier.)

Instead of an error we're seeing a search result. We'll get to searching later, but for now let's rejoice in the fact that we've indexed something!

Now that we've got a movie in our index let's look at how we can update it, adding a list of genres to it. In order to do that we simply index it again using the same ID. In other words, we make the exact same indexing request as before but with an extended JSON object containing genres.

curl -XPUT "http://localhost:9200/movies/movie/1" -d' { "title": "The Godfather", "director": "Francis Ford Coppola", "year": 1972, "genres": ["Crime", "Drama"] }'

The response from ElasticSearch is the same as before with one difference: the _version property in the result object has the value two instead of one.

The version number can be used to track how many times a document has been indexed. Its primary purpose, however, is to allow for optimistic concurrency control: we can supply a version in indexing requests as well, and ElasticSearch will then only overwrite the document if the supplied version matches the one in the index (or, when external versioning is used, if it is higher than what's in the index).
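As a rough sketch of what supplying a version looks like, the version is passed as a version query string parameter on the indexing URL. The helper name build_versioned_index_request below is an assumption made for illustration, and the code only builds the request without sending it.

```python
import json
import urllib.request
from urllib.parse import urlencode


def build_versioned_index_request(url, document, version):
    """Build (but don't send) a PUT that carries the version we expect."""
    query = urlencode({"version": version})
    body = json.dumps(document).encode("utf-8")
    return urllib.request.Request(f"{url}?{query}", data=body, method="PUT")


request = build_versioned_index_request(
    "http://localhost:9200/movies/movie/1",
    {"title": "The Godfather", "director": "Francis Ford Coppola", "year": 1972},
    version=1,
)
print(request.get_method(), request.full_url)
```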

Getting by ID

We've so far covered indexing new documents as well as updating existing ones. We've also seen an example of a simple search request and that our indexed movie appeared in that.

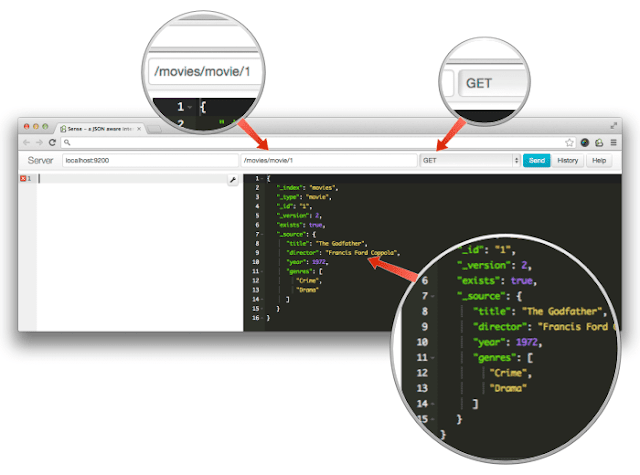

While it's possible to search for documents in the index, that's overkill if we only want to retrieve a single one with a known ID. A simpler and faster approach is to retrieve it by ID, using GET.

In order to do that we make a GET request to the same URL as when we indexed it, only this time the ID part of the URL is mandatory. In other words, in order to retrieve a document by ID from ElasticSearch we make a GET request to http://localhost:9200/<index>/<type>/<id>.

Let's try it with our movie using the following request:

curl -XGET "http://localhost:9200/movies/movie/1" -d''

As you can see, the result object contains similar metadata to what we saw when indexing, such as index, type and version information. Last but not least it has a property named "_source" which contains the actual document.

There's not much more to say about GET as it's pretty straightforward. Let's move on to the final CRUD operation.

Deleting documents

In order to remove a single document from the index by ID we again use the same URL as for indexing and getting it, only this time we change the HTTP method to DELETE.

curl -XDELETE "http://localhost:9200/movies/movie/1" -d''

The response object contains some of the usual suspects in terms of metadata, along with a property named "_found" indicating that the document was indeed found and that the operation was successful.

If we, after executing the DELETE call, switch back to GET we can verify that the document has indeed been deleted.

Searching

So, we've covered the basics of working with data in an ElasticSearch index and it's time to move on to more exciting things: searching. However, considering the last thing we did was to delete the only document we had from our index, we'll first need some sample data. Below are a number of indexing requests that we'll use.

curl -XPUT "http://localhost:9200/movies/movie/1" -d' { "title": "The Godfather", "director": "Francis Ford Coppola", "year": 1972, "genres": ["Crime", "Drama"] }'

curl -XPUT "http://localhost:9200/movies/movie/2" -d' { "title": "Lawrence of Arabia", "director": "David Lean", "year": 1962, "genres": ["Adventure", "Biography", "Drama"] }'

curl -XPUT "http://localhost:9200/movies/movie/3" -d' { "title": "To Kill a Mockingbird", "director": "Robert Mulligan", "year": 1962, "genres": ["Crime", "Drama", "Mystery"] }'

curl -XPUT "http://localhost:9200/movies/movie/4" -d' { "title": "Apocalypse Now", "director": "Francis Ford Coppola", "year": 1979, "genres": ["Drama", "War"] }'

curl -XPUT "http://localhost:9200/movies/movie/5" -d' { "title": "Kill Bill: Vol. 1", "director": "Quentin Tarantino", "year": 2003, "genres": ["Action", "Crime", "Thriller"] }'

curl -XPUT "http://localhost:9200/movies/movie/6" -d' { "title": "The Assassination of Jesse James by the Coward Robert Ford", "director": "Andrew Dominik", "year": 2007, "genres": ["Biography", "Crime", "Drama"] }'

It's worth pointing out that ElasticSearch has an endpoint (_bulk) for indexing multiple documents with a single request. However, that's out of scope for this tutorial, so we're keeping it simple and using six separate requests.
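For the curious, here is a rough sketch of how a body for the _bulk endpoint could be assembled. It assumes the newline-delimited format in which each document is preceded by an action line naming the index, type and ID. The helper name build_bulk_body is made up for this example, and only two of the six movies are included to keep it short.

```python
import json

# Two of the sample movies from above, keyed by the ID we want them to have.
movies = {
    "1": {"title": "The Godfather", "director": "Francis Ford Coppola",
          "year": 1972, "genres": ["Crime", "Drama"]},
    "2": {"title": "Lawrence of Arabia", "director": "David Lean",
          "year": 1962, "genres": ["Adventure", "Biography", "Drama"]},
}


def build_bulk_body(index, doc_type, documents):
    """Return a newline-delimited body: one action line, then one document line."""
    lines = []
    for doc_id, document in documents.items():
        action = {"index": {"_index": index, "_type": doc_type, "_id": doc_id}}
        lines.append(json.dumps(action))
        lines.append(json.dumps(document))
    # The bulk format expects a trailing newline after the last line.
    return "\n".join(lines) + "\n"


bulk_body = build_bulk_body("movies", "movie", movies)
print(bulk_body)
```

The resulting string would be sent as the body of a POST request to http://localhost:9200/_bulk.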

The _search endpoint

Now that we have put some movies into our index, let's see if we can find them again by searching. In order to search with ElasticSearch we use the _search endpoint, optionally with an index and type. That is, we make requests to a URL following this pattern: <index>/<type>/_search where index and type are both optional.

In other words, in order to search for our movies we can make POST requests to either of the following URLs:

- http://localhost:9200/_search - Search across all indexes and all types.

- http://localhost:9200/movies/_search - Search across all types in the movies index.

- http://localhost:9200/movies/movie/_search - Search explicitly for documents of type movie within the movies index.

As we only have a single index and a single type, it doesn't matter which one we use. We'll use the first URL for the sake of brevity.
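The three URL variants above follow one pattern, which can be sketched as a small Python helper. The function name search_url and its parameters are assumptions made for illustration. In this sketch a type is only included when an index is also given.

```python
def search_url(index=None, doc_type=None, base_url="http://localhost:9200"):
    """Build a _search URL, optionally scoped to an index and a type."""
    parts = [base_url]
    if index:
        parts.append(index)
        # In this sketch a type is only meaningful together with an index.
        if doc_type:
            parts.append(doc_type)
    parts.append("_search")
    return "/".join(parts)


print(search_url())
print(search_url("movies"))
print(search_url("movies", "movie"))
```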

Search request body and ElasticSearch's query DSL

If we simply send a request to one of the above URLs we'll get all of our movies back. In order to make a more useful search request we also need to supply a request body with a query. The request body should be a JSON object which, among other things, can contain a property named "query" in which we can use ElasticSearch's query DSL.

    {
        "query": {
            //Query DSL here
        }
    }

One may wonder what the query DSL is. It's ElasticSearch's own domain specific language based on JSON in which queries and filters can be expressed. Think of it like ElasticSearch's equivalent of SQL for a relational database. Here's part of how ElasticSearch's own documentation explains it:

Think of the Query DSL as an AST of queries. Certain queries can contain other queries (like the bool query), other can contain filters (like the constant_score), and some can contain both a query and a filter (like the filtered). Each of those can contain any query of the list of queries or any filter from the list of filters, resulting in the ability to build quite complex (and interesting) queries.

Basic free text search

The query DSL features a long list of different types of queries that we can use. For "ordinary" free text search we'll most likely want to use one called "query string query".

A query string query is an advanced query with a lot of different options that ElasticSearch will parse and transform into a tree of simpler queries. Still, it can be very easy to use if we ignore all of its optional parameters and simply feed it a string to search for.

Let's try a search for the word "kill" which is present in the title of two of our movies:

curl -XPOST "http://localhost:9200/_search" -d' { "query": { "query_string": { "query": "kill" } } }'

As expected we're getting two hits, one for each of the movies with the word "kill" in the title. Let's look at another scenario, searching in specific fields.

Specifying fields to search in

In the previous example we used a very simple query, a query string query with only a single property, "query". As mentioned before the query string query has a number of settings that we can specify and if we don't it will use sensible default values.

One such setting is called "fields" and can be used to specify a list of fields to search in. If we don't use that the query will default to searching in a special field called "_all" that ElasticSearch automatically generates based on all of the individual fields in a document.

Let's try to search for movies only by title. That is, if we search for "ford" we want to get a hit for "The Assassination of Jesse James by the Coward Robert Ford" but not for either of the movies directed by Francis Ford Coppola.

In order to do that we modify the previous search request body so that the query string query has a fields property with an array of fields we want to search in:

curl -XPOST "http://localhost:9200/_search" -d' { "query": { "query_string": { "query": "ford", "fields": ["title"] } } }'

As expected we get a single hit, the movie with the word "ford" in its title. Compare that to a request where we've removed the fields property from the query: it then searches the _all field and also matches the two movies directed by Francis Ford Coppola.
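The two request bodies, with and without the fields property, can be built from one small helper. This is only a sketch: the function name query_string_body is an assumption, and the code constructs the JSON body without contacting ElasticSearch.

```python
import json


def query_string_body(text, fields=None):
    """Build a search request body with a query string query.

    When no fields are given the property is left out, so ElasticSearch
    falls back to its default (the _all field).
    """
    query = {"query": text}
    if fields:
        query["fields"] = list(fields)
    return {"query": {"query_string": query}}


title_only = query_string_body("ford", fields=["title"])
all_fields = query_string_body("ford")
print(json.dumps(title_only, indent=2))
print(json.dumps(all_fields, indent=2))
```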

Filtering

We've covered a couple of simple free text search queries above. Let's look at another one where we search for "drama" without explicitly specifying fields:

curl -XPOST "http://localhost:9200/_search" -d' { "query": { "query_string": { "query": "drama" } } }'

As we have five movies in our index containing the word "drama" in the _all field (from the genres field) we get five hits for the above query. Now, imagine that we want to limit these hits to movies released in 1962. In order to do that we need to apply a filter requiring the "year" field to equal 1962.

To add such a filter we modify our search request body so that our current top level query, the query string query, is wrapped in a filtered query:

    {
        "query": {
            "filtered": {
                "query": {
                    "query_string": {
                        "query": "drama"
                    }
                },
                "filter": {
                    //Filter to apply to the query
                }
            }
        }
    }

A filtered query is a query that has two properties, query and filter. When executed it filters the result of the query using the filter. To finalize the query we'll need to add a filter requiring the year field to have value 1962.

ElasticSearch's query DSL has a wide range of filters to choose from. For this simple case where a certain field should match a specific value a term filter will work well.

"filter": { "term": { "year": 1962 } }

The complete search request now looks like this:

curl -XPOST "http://localhost:9200/_search" -d' { "query": { "filtered": { "query": { "query_string": { "query": "drama" } }, "filter": { "term": { "year": 1962 } } } } }'
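The nesting in the request above is easier to see when it is composed from small pieces. Here's a minimal Python sketch. The helper names query_string, term_filter and filtered are made up for this example and simply mirror the names used in the query DSL.

```python
import json


def query_string(text):
    """A query string query for the given text."""
    return {"query_string": {"query": text}}


def term_filter(field, value):
    """A term filter requiring the field to have exactly this value."""
    return {"term": {field: value}}


def filtered(query, filter_clause):
    """Wrap a query so that its results are narrowed by a filter."""
    return {"filtered": {"query": query, "filter": filter_clause}}


search_body = {"query": filtered(query_string("drama"), term_filter("year", 1962))}
print(json.dumps(search_body, indent=2))
```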

Filtering without a query

In the above example we limit the results of a query string query using a filter. What if all we want to do is apply a filter? That is, we want all movies matching certain criteria.

In such cases we still use the "query" property in the search request body, which expects a query. In other words, we can't just add a filter, we need to wrap it in some sort of query.

One solution for doing this is to modify our current search request, replacing the query string query in the filtered query with a match_all query which is a query that simply matches everything. Like this:

curl -XPOST "http://localhost:9200/_search" -d' { "query": { "filtered": { "query": { "match_all": { } }, "filter": { "term": { "year": 1962 } } } } }'

Another, simpler option is to use a constant score query:

curl -XPOST "http://localhost:9200/_search" -d' { "query": { "constant_score": { "filter": { "term": { "year": 1962 } } } } }'
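To make it concrete what such a filter-only request asks for, here is a plain Python stand-in that applies the same term filter to the sample movies in memory. No ElasticSearch is involved. The function apply_term_filter is a hypothetical helper that only mimics exact matching on a single field.

```python
# Titles and years of the six sample movies indexed earlier.
movies = [
    {"title": "The Godfather", "year": 1972},
    {"title": "Lawrence of Arabia", "year": 1962},
    {"title": "To Kill a Mockingbird", "year": 1962},
    {"title": "Apocalypse Now", "year": 1979},
    {"title": "Kill Bill: Vol. 1", "year": 2003},
    {"title": "The Assassination of Jesse James by the Coward Robert Ford", "year": 2007},
]


def apply_term_filter(documents, term_filter):
    """Keep documents whose field equals the value in {"term": {field: value}}."""
    field, value = next(iter(term_filter["term"].items()))
    return [doc for doc in documents if doc.get(field) == value]


body = {"query": {"constant_score": {"filter": {"term": {"year": 1962}}}}}
year_filter = body["query"]["constant_score"]["filter"]
titles = [doc["title"] for doc in apply_term_filter(movies, year_filter)]
print(titles)  # ['Lawrence of Arabia', 'To Kill a Mockingbird']
```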

Mapping

Let's look at a search request similar to the last one, only this time we filter by director instead of year.

curl -XPOST "http://localhost:9200/_search" -d' { "query": { "constant_score": { "filter": { "term": { "director": "Francis Ford Coppola" } } } } }'

As we have two movies directed by Francis Ford Coppola in our index it doesn't seem too far-fetched that this request should result in two hits, right? That's not the case, however: we get no hits at all.

What's going on here? We've obviously indexed two movies with "Francis Ford Coppola" as director and that's what we see in search results as well. Well, while ElasticSearch has a JSON object with that data that it returns to us in search results in the form of the _source property, that's not what it has in its index.

When we index a document with ElasticSearch it (simplified) does two things: it stores the original data untouched for later retrieval in the form of _source and it indexes each JSON property into one or more fields in a Lucene index. During the indexing it processes each field according to how the field is mapped. If it isn't mapped, default mappings depending on the field's type (string, number etc.) are used.

As we haven't supplied any mappings for our index ElasticSearch uses the default mappings for strings for the director field. This means that in the index the director field's value isn't "Francis Ford Coppola". Instead it's something more like ["francis", "ford", "coppola"].
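The effect can be imitated in a few lines of Python. This is only a rough stand-in for the default string analysis (lowercasing and splitting into words); the real analyzer does more than this, and the function names analyze and term_matches are made up for the example.

```python
import re


def analyze(text):
    """Very rough approximation of default string analysis:
    lowercase the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())


def term_matches(indexed_terms, value):
    """A term filter compares its value as-is against the indexed terms."""
    return value in indexed_terms


terms = analyze("Francis Ford Coppola")
print(terms)                                        # ['francis', 'ford', 'coppola']
print(term_matches(terms, "Francis Ford Coppola"))  # False
print(term_matches(terms, "coppola"))               # True
```

The full name never appears as a single term in the index, which is why the term filter above finds nothing.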

So, what to do if we want to filter by the exact name of the director? We modify how it's mapped. There are a number of ways to add mappings to ElasticSearch: through a configuration file, as part of an HTTP request that creates an index, and by calling the _mapping endpoint.

Using the last approach we could in theory fix the above issue by adding a mapping for the "director" field instructing ElasticSearch not to analyze (tokenize etc.) the field at all when indexing it, like this:

curl -XPUT "http://localhost:9200/movies/movie/_mapping" -d' { "movie": { "properties": { "director": { "type": "string", "index": "not_analyzed" } } } }'

There are, however, a couple of issues if we do this. First of all, it won't work as there already is a mapping for the field.

In many cases it's not possible to modify existing mappings. Often the easiest workaround for that is to create a new index with the desired mappings and re-index all of the data into the new index.

The second problem with adding the above mapping is that, even if we could add it, we would have limited our ability to search in the director field. That is, while a search for the exact value in the field would match we wouldn't be able to search for single words in the field.

Luckily, there's a simple solution to our problem. We add a mapping that upgrades the field to a multi field. What that means is that we'll map the field multiple times for indexing. Given that one of the ways we map it matches the existing mapping both by name and settings, that will work fine and we won't have to create a new index.

Here's a request that does that:

curl -XPUT "http://localhost:9200/movies/movie/_mapping" -d' { "movie": { "properties": { "director": { "type": "multi_field", "fields": { "director": {"type": "string"}, "original": {"type" : "string", "index" : "not_analyzed"} } } } } }'

So, what did we just do? We told ElasticSearch that whenever it sees a property named "director" in a movie document that is about to be indexed in the movies index it should index it multiple times: once into a field with the same name (director) and once into a field named "director.original". The latter field should not be analyzed, maintaining the original value and allowing us to filter by the exact director name.
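As a toy illustration of what the multi field mapping achieves, the sketch below indexes one value in two ways, once analyzed and once untouched. It is plain Python, not ElasticSearch; the analyze function is a simplified approximation of the default analysis and index_director is a hypothetical helper.

```python
import re


def analyze(text):
    """Simplified stand-in for the default string analysis."""
    return re.findall(r"\w+", text.lower())


def index_director(value):
    """Mimic the multi field mapping: one analyzed field, one left as-is."""
    return {
        "director": analyze(value),    # like the default string mapping
        "director.original": [value],  # like "index": "not_analyzed"
    }


fields = index_director("Francis Ford Coppola")
print("Francis Ford Coppola" in fields["director.original"])  # True
print("ford" in fields["director"])                           # True
print("Francis Ford Coppola" in fields["director"])           # False
```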

With our new shiny mapping in place we can re-index one or both of the movies directed by Francis Ford Coppola (copy from the list of initial indexing requests above) and try the search request that filtered by director again. Only, this time we don't filter on the "director" field (which is indexed the same way as before) but instead on the "director.original" field:

curl -XPOST "http://localhost:9200/_search" -d' { "query": { "constant_score": { "filter": { "term": { "director.original": "Francis Ford Coppola" } } } } }'

Where to go from here

We've covered quite a lot of things in this article. Still, we've barely scratched the surface of ElasticSearch's goodness.

For instance, there's a lot more to searching with ElasticSearch than we've seen here. We can create search requests where we specify how many hits we want, use highlighting, get spelling suggestions and much more. Also, the query DSL contains many interesting queries and filters that we can use. Then there's of course also a whole range of facets that we can use to extract statistics from our data or build navigations.

As if that wasn't enough, we can go far, far beyond the simple mapping example we've seen here to accomplish wonderful and interesting things. And then there are of course plenty of performance optimizations and considerations. And functionality to find similar content. And, and, and...

But for now, thanks for reading! I hope you found this tutorial useful on your way to discovering the great open source project ElasticSearch.